Mesos Architecture in Brief

Incentive

Before coming to Mesosphere as an intern, I read the Mesos paper and gained a general understanding of its architecture, but none of that understanding was based on actual code. After 1.5 months at Mesosphere, having contributed some code to the project, I feel it is necessary to connect the general flow with the code logic.

Background

Mesos Architecture

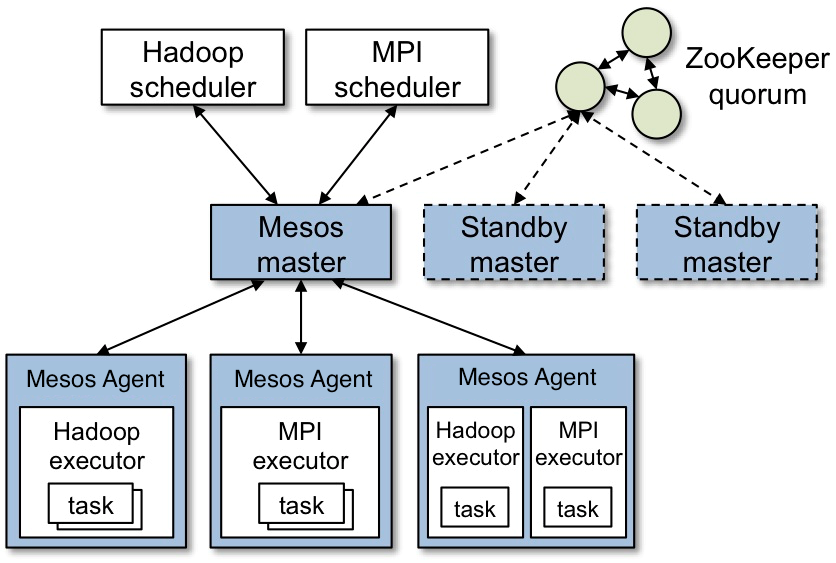

There is already a pretty good explanation of the different roles of, and relationships between, the important components in Mesos.

In brief, we have several important concepts: Master, Agent/Slave, and Framework (consisting of a Scheduler and an Executor). Master and Agent nodes are physical or virtual machines in the datacenter. The Master node is responsible for scheduling tasks (here “task” has its everyday meaning of “a specific piece of work to do, such as running a Spark job”, which differs from the definition of “task” in the Mesos project), and the Agent node is responsible for running them. To schedule and execute tasks in this distributed system, there must be corresponding parts on the Master side and the Agent side that do the scheduling and executing work respectively. Together we call them a Framework. The part that works with the Master is called the scheduler (it registers with the Master to receive resource offers and does not have to run on the Master machine itself); the part that works on the Agent node is called the executor. From this perspective, it is easy to see where the two names come from.

Since there might be multiple kinds of tasks to run, we sometimes need to run multiple Frameworks (and thus multiple schedulers and executors) on Mesos. As a result, there might be multiple schedulers registered with the Master and multiple executors on an Agent node.

A Little Bit Further

What we have explained so far is a general overview of the Mesos architecture, independent of the actual implementation. To look into the actual implementation, we need some more background.

Libprocess

Inside a distributed system, one of the most important components is the communication mechanism between nodes, or even between processes running on the same node. Libprocess is the library Mesos implements and uses for this. It abstracts communication and event flow in an actor programming style. In Libprocess, the fundamental entity is the “Process”, which is an “actor” in that style. Whether two Processes are on the same node or on different nodes, Libprocess provides asynchronous event handling between them. This makes it much easier to write logic and transfer information without worrying about which node a process is running on. More specific programmer guides for Libprocess can be found here and here (in Chinese).

For our purposes, we don’t need to know much about how Libprocess is implemented or used. We only need to know that, to communicate inside Mesos, we register handlers for certain message types and then handle the messages as they arrive.
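To make the "register a handler, then handle the message" idea concrete, here is a minimal sketch. It is not libprocess: `ToyProcess`, `Message`, `send`, and `drain` are hypothetical names invented for illustration, and only the idea of an `install`-style registration is borrowed from Mesos. Messages are queued and delivered later, which loosely imitates asynchronous delivery.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for a message; real Mesos messages are protobufs.
struct Message {
  std::string type;     // e.g. "RegisterSlaveMessage"
  std::string payload;  // opaque body for this sketch
};

// Toy "process" that owns a table of handlers keyed by message type.
// It imitates the idea of installing handlers only; it is not libprocess.
class ToyProcess {
public:
  using Handler = std::function<void(const Message&)>;

  // Register a handler for one message type (replaces any previous one).
  void install(const std::string& type, Handler handler) {
    assert(handler && "handler must be callable");
    handlers_[type] = std::move(handler);
  }

  // Queue a message; delivery happens later, in drain().
  void send(Message message) { mailbox_.push_back(std::move(message)); }

  // Deliver queued messages in order; returns how many had a handler.
  int drain() {
    int handled = 0;
    // Take the batch first so that handlers may call send() safely.
    std::vector<Message> batch;
    batch.swap(mailbox_);
    for (const Message& m : batch) {
      auto it = handlers_.find(m.type);
      if (it == handlers_.end()) continue;  // no handler: message is dropped
      it->second(m);
      ++handled;
    }
    return handled;
  }

private:
  std::map<std::string, Handler> handlers_;
  std::vector<Message> mailbox_;
};
```

With this sketch, a "master" would call `install("RegisterSlaveMessage", ...)` once at startup, and every later `send` of that type ends up in the installed handler when `drain` runs. Messages of a type with no handler are silently dropped here, which is a simplification chosen for the sketch.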

Flow

Then, let’s take a look at how Mesos makes it possible for a single task to be executed on the cluster with our given resource requirement.

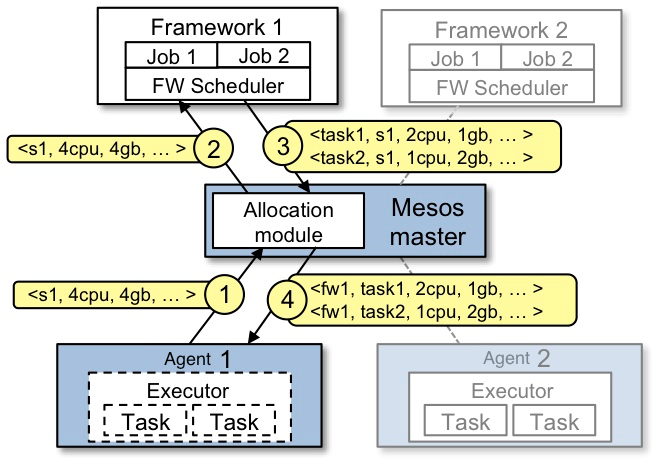

Here is a diagram of how resources are offered and finally allocated to a specific task. In brief, there are 4 steps:

- The Agent reports its resources (how much CPU and memory it has) to the Master

- The Master offers the resources as resource Offers to the Framework (specifically, to the Framework's scheduler, which is registered with the Master)

- The Framework either rejects the offer (then the resources will be offered to other Frameworks) or accepts it (then it should reply with how much of the resources it will use and which tasks it wants to run)

- Given the accepted offer and the related tasks (this is the “task” definition inside Mesos, which is simpler: a piece of work that runs on a node), the Master sends the tasks to the Agent whose resources were offered. Specifically, the executor running on that Agent executes the task and manages its status.
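The four steps above can be sketched as a small single-process simulation. Everything here is a hypothetical simplification for illustration (`Resources`, `Offer`, `Task`, `Agent`, `Scheduler`, `fits`, and `offerRound` are not Mesos classes or functions), and real Mesos does all of this with messages between machines rather than direct function calls.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <string>
#include <vector>

// All types here are hypothetical simplifications, not Mesos classes.
struct Resources { double cpus; double memMb; };
struct Offer { std::string agentId; Resources resources; };
struct Task { std::string name; Resources needs; };

// A framework's scheduler: returns tasks to launch, or nothing to decline.
using Scheduler = std::function<std::vector<Task>(const Offer&)>;

struct Agent {
  std::string id;
  Resources total;                   // step 1: what the agent reports
  std::vector<std::string> running;  // names of tasks handed to executors
};

// Returns true if `needs` fits inside `available`.
bool fits(const Resources& needs, const Resources& available) {
  return needs.cpus <= available.cpus && needs.memMb <= available.memMb;
}

// One offer round for one agent. Returns the index of the framework
// that accepted the offer, or -1 if every framework declined.
int offerRound(Agent& agent, const std::vector<Scheduler>& frameworks) {
  assert(!agent.id.empty());
  Offer offer{agent.id, agent.total};                // step 2: build the offer
  for (std::size_t i = 0; i < frameworks.size(); ++i) {
    std::vector<Task> tasks = frameworks[i](offer);  // step 3: ask framework
    if (tasks.empty()) continue;                     // declined: try the next
    Resources left = offer.resources;
    for (const Task& t : tasks) {
      if (!fits(t.needs, left)) continue;  // skip tasks exceeding the offer
      left.cpus -= t.needs.cpus;
      left.memMb -= t.needs.memMb;
      agent.running.push_back(t.name);               // step 4: run on agent
    }
    return static_cast<int>(i);
  }
  return -1;
}
```

In this sketch a declined offer simply moves on to the next framework in the list, and a task that does not fit in what is left of the offer is skipped. How real Mesos chooses the next framework and validates tasks is more involved and is not modeled here.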

With these 4 steps, we have a general overview of how the whole procedure is managed. Let’s look into the code to find the actual implementation.

In the section below, I use function signatures or short explanations instead of line numbers to mark the critical logic of the procedure, since line numbers may change. You can find the code directly by searching for the function signature. The hierarchy of the signatures shows which logic sits inside another, that is, which inner logic is called by the outer function.

Critical Steps

- Agent reports/registers resources to Master
  * Master launch: `void Master::initialize()`
    * Master registers message handlers: `install<RegisterSlaveMessage>(`
  * Slave launch: `void Slave::initialize()`
    * detect resources: `Try<Resources> resources = Containerizer::resources(flags);`
  * Slave detected master: `void Slave::detected(`
    * `Slave::doReliableRegistration`
      * send `RegisterSlaveMessage`
  * Master receives and handles `RegisterSlaveMessage`: `void Master::registerSlave(`
    * `void Master::__registerSlave(`
      * add `SlaveInfo` to the current slave list

- Master sends resource offer to framework (specifically the scheduler)
  * `Master::offer(`
    * send `ResourceOffersMessage`

- Framework accepts the offer
  * sched process receives the OFFERS event (`ResourceOffersMessage` can be translated into an OFFERS event)
    * src/sched/sched.cpp: `case Event::OFFERS: {`
      * call `void resourceOffers(`
        * `scheduler->resourceOffers(`
          * this function is to be implemented by the specific scheduler; here we use test_http_framework.cpp as the example
            * launch the tasks that are in the staging list
            * send ACCEPT Call
  * Master receives the Accept Call: `Master::accept(`
    * validation
    * authorization
    * send `RunTaskMessage`: `void Master::_accept(`

- Slave launches the task on its executor
  * Slave receives `RunTaskMessage`
    * `Slave::runTask(`
      * `Slave::run(`
  * Executor launches the task
    * `Executor::addTask(`
    * `Executor::launchTask(`
      * this is to be implemented by the specific executor

From the analysis above, it is fairly easy to follow the whole procedure of making offers, generating tasks, and finally getting them executed on a node. Notice that two parts (scheduler and executor) contain code that can be implemented by the user. These two parts are what we need to write if we want to implement a Framework that lets our own distributed application run on Mesos. Mesos abstracts all of the resource management and task scheduling work for us, so we don’t have to worry about these aspects when developing or using new distributed applications.
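The two user-implemented parts can be pictured as two small interfaces. The sketch below is a toy under stated assumptions: `ToyScheduler`, `ToyExecutor`, `OneTaskScheduler`, `RecordingExecutor`, and `dispatch` are hypothetical names, and the `Offer` and `TaskInfo` structs are stripped-down stand-ins. The method names `resourceOffers` and `launchTask` mirror the callbacks mentioned in the steps above, but the real Mesos callbacks take additional arguments and protobuf types.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical, simplified types; the real callbacks take more arguments.
struct Offer { std::string agentId; double cpus; };
struct TaskInfo { std::string name; double cpus; };

// Extension point 1: the framework decides what to run on an offer.
class ToyScheduler {
public:
  virtual ~ToyScheduler() = default;
  virtual std::vector<TaskInfo> resourceOffers(const Offer& offer) = 0;
};

// Extension point 2: the framework decides how a task is actually run.
class ToyExecutor {
public:
  virtual ~ToyExecutor() = default;
  virtual void launchTask(const TaskInfo& task) = 0;
};

// Example scheduler: asks for one 1-CPU task per offer if the offer is
// large enough, and declines (returns nothing) otherwise.
class OneTaskScheduler : public ToyScheduler {
public:
  std::vector<TaskInfo> resourceOffers(const Offer& offer) override {
    if (offer.cpus < 1.0) return {};
    return {TaskInfo{"hello", 1.0}};
  }
};

// Example executor: records task names instead of running anything.
class RecordingExecutor : public ToyExecutor {
public:
  void launchTask(const TaskInfo& task) override {
    launched.push_back(task.name);
  }
  std::vector<std::string> launched;
};

// Stand-in for the Mesos side: hands the offer to the scheduler and
// forwards whatever it returns to the executor. Returns the task count.
std::size_t dispatch(const Offer& offer, ToyScheduler& s, ToyExecutor& e) {
  assert(!offer.agentId.empty());
  std::vector<TaskInfo> tasks = s.resourceOffers(offer);
  for (const TaskInfo& t : tasks) e.launchTask(t);
  return tasks.size();
}
```

The point of the design is that `dispatch` knows nothing about what the tasks do; a different application only needs to supply its own subclasses of the two interfaces.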

You may also have noticed that it is fairly easy to follow the logic, or to find a particular piece of it, by first looking at the function definition in the .hpp file of the master or slave and then searching for its references. Most of the time a reference points to a message handler; we can then search for that message type name to find where the message is generated. This gives us an almost complete view of the logic flow.